There's a lively discussion on HIStalk (Readers Write 8/27/08) regarding the merits of CCHIT. The Jim Tate piece isn't long, or even that informative. It simply touts CCHIT as an

.. organization that is helping level the playing field and make it safer for clinicians to take the plunge into electronic records.

This seems innocuous enough. Judging by the negative responses though, some people have real problems with CCHIT. In particular, the Al Borges, MD Response to "CCHIT: the 800-Pound Gorilla" is a detailed point-by-point rebuttal ("CCHIT is simply not necessary.").

I guess it's not surprising that the biggest issue seems to revolve around money. The cost of obtaining CCHIT certification has stratified EMR companies (big vs. small) and makes it even more difficult for practices to see an ROI.

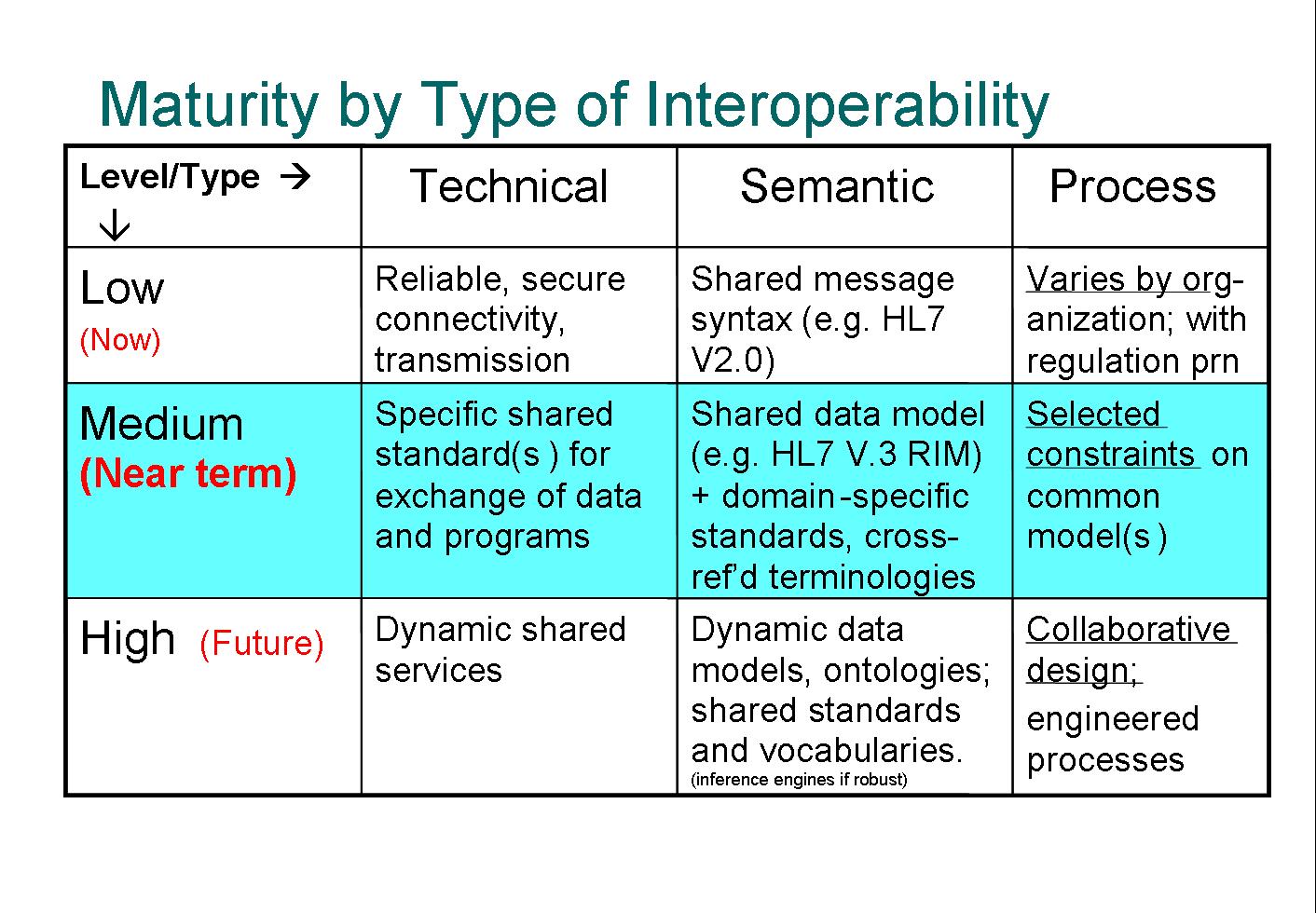

Also, the discussion of interoperability standards (or lack thereof) is one of my hot buttons. As Mahoghany Rush (comment #9) says:

Anyone here who talks about being HL7 compliant - and thinks it really solves a problem - has never personally written an interface.

So true.

In this regard Inga asks (in News 8/29/08):

Are lab standards an issue one of the various work groups is addressing? Are the labs on board?

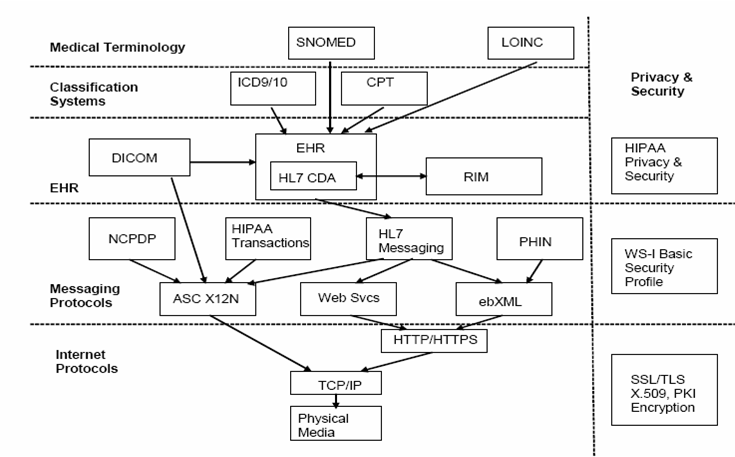

When you say "lab" what you're really talking about are the large number of medical devices commonly found in both hospitals and private practice offices. As you note, the need for interfaces to these devices is so the data they generate can be associated with the proper patient record in the EMR. This not only gives a physician a more complete picture of the patient's status, it also vastly improves the efficiency of the entire clinical staff, who no longer have to gather all of this information from multiple sources.
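To make "associated with the proper patient record" concrete, here is a minimal sketch of what the receiving side of such an interface does. It assumes a simplified, newline-separated HL7 v2-style result message; the sample message, the `parse_result_message` and `file_results` helpers, and the dictionary standing in for the EMR are all made up for illustration, and a real interface has to cope with far more (escaping, repeating fields, acknowledgements, vendor quirks).

```python
def parse_result_message(message: str) -> dict:
    """Pull the patient ID and observations out of a simplified HL7 v2-style message."""
    patient_id = None
    observations = []
    for segment in message.strip().splitlines():
        fields = segment.strip().split("|")
        if fields[0] == "PID":
            # PID-3 carries the patient identifier; keep only its first component.
            patient_id = fields[3].split("^")[0]
        elif fields[0] == "OBX":
            # OBX-3 is the observation identifier, OBX-5 the value, OBX-6 the units.
            code_parts = fields[3].split("^")
            observations.append({
                "code": code_parts[0],
                "name": code_parts[1] if len(code_parts) > 1 else "",
                "value": fields[5],
                "units": fields[6],
            })
    if patient_id is None:
        raise ValueError("message has no PID segment")
    return {"patient_id": patient_id, "observations": observations}


def file_results(records: dict, message: str) -> None:
    """Attach the parsed observations to the matching patient in a toy record store."""
    parsed = parse_result_message(message)
    records.setdefault(parsed["patient_id"], []).extend(parsed["observations"])


# Hypothetical device output: one heart-rate reading for patient 12345.
SAMPLE = "\n".join([
    "MSH|^~\\&|DEVICE|CLINIC|EMR|CLINIC|200809031200||ORU^R01|MSG0001|P|2.3",
    "PID|1||12345^^^CLINIC^MR||DOE^JOHN",
    "OBX|1|NM|8867-4^Heart rate^LN||72|/min|||||F",
])

records = {}
file_results(records, SAMPLE)
```

Even in this toy version, the parser silently depends on the sender putting the identifier in the first component of PID-3 and the value in OBX-5. Every one of those assumptions is a place where two "compliant" systems can disagree.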

The answer to your second question is yes: many "labs" (medical device companies) are actively involved in the development of interoperability standards. The EMR companies are also major participants.

There are two fundamental problems with "standards" though:

- A standard is always a compromise.

- A standard is always evolving.

By its very design, a standard will require the implementer to jump through at least a few hoops (some of which may be on fire). Also, a device-to-EMR interface you complete today will probably not work for the same device and EMR a year from now: one or both will be implementing the next generation of the standard by then.

Nobody dislikes standards. Interoperability is usually good for business. There are two primary reasons why a company might not embrace communications standards:

- The compromise may be too costly, either from a performance or resources point of view, so a company will just do it their own way.

- You build a proprietary system in order to explicitly lock out other players. This is a tactic used by large companies that provide end-to-end systems.

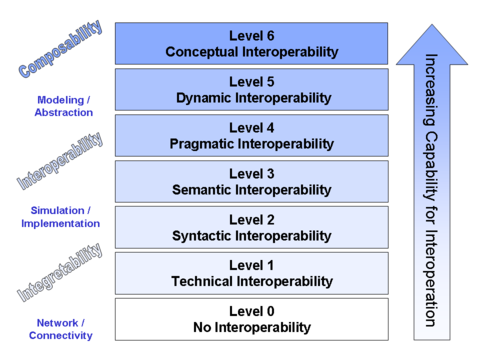

The "standards" problem is not just a Healthcare interoperability issue. The IT within every industry struggles with this. The complexity of Healthcare IT and its multi-faceted evolutionary path has just exacerbated the situation.

So, the answer is that everyone is working very hard to resolve these tough interoperability issues. Unfortunately, the nature of the beast is such that it's going to take a long time for the solutions to become satisfactory.

UPDATE (9/3/08): The response to Inga was published here: Readers Write 9/3/08. Thanks Inga!