Bear with me, I'll get to the point of the title by the end of the post.

I primarily develop for Microsoft platform targets, so I have a lot of familiarity with Microsoft development tools and operating systems. I also work with Linux-based systems, but mostly on the systems administration side: maintaining servers for R&D tools like Trac and Subversion.

I recently became interested in trying Mono on Linux as a .NET development platform.

This also gave me a reason to try Microsoft Virtual PC 2007 (SP1) on XP SP3. A couple of weeks ago I went to a local .NET Developer's Group meeting on Virtual PC technology. Being a Microsoft group, most of the discussion was about running Microsoft operating systems, but I saw the potential for using VPC to run Linux for cross-platform development. My PC is an older Pentium D dual core without virtualization support, but it has 3 GB of RAM and plenty of hard disk space, so I thought I'd give it a try.

Downloading and installing Ubuntu 8.04 (Hardy Heron) LTS Desktop on VPC 2007 is a little quirky, but many blog posts detail what it takes to create a stable system, e.g. "Installing Ubuntu 8.04 under Microsoft Virtual PC 2007". Other system optimizations and fixes are easy to find, particularly on the Ubuntu Forums.

OK, so now I have a fresh Linux installation and my goal is to install a Mono development environment. I started off by following the instructions in the Ubuntu section from the Mono Other Downloads page. The base Ubuntu Mono installation does not include development tools. From some reading I found that I also had to install the compilers:

# apt-get install mono-mcs

# apt-get install mono-gmcs

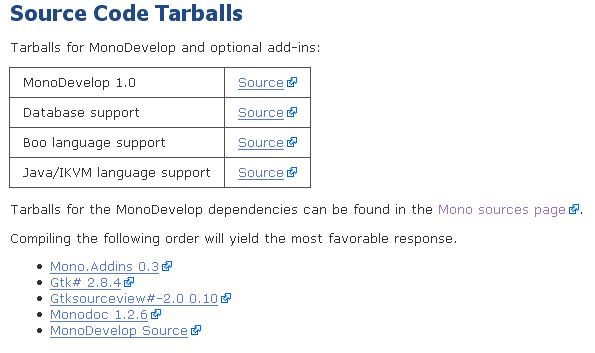

So now I move on to MonoDevelop. Here's what the download page looks like:

Here's my first gripe: Why do I have to download and install four other dependencies (not including the Mono compiler dependency that's not even mentioned here)?

Second gripe: All of the packages require unpacking, going to a shell prompt, changing to the unpack directory, and running the dreaded:

./configure

make

Also notice the line: "Compiling the following order will yield the most favorable response." What does that mean?

So I download Mono.Addins 0.3, unpack it, and run ./configure. Here's what I get:

configure: error: No al tool found. You need to install either the mono or .Net SDK.

This is as far as I've gotten. I'm sure there's a solution for this. I know I either forgot to install something or made a stupid error somewhere along the way. Until I spend the time searching through forums and blogs to figure it out, I'm dead in the water.
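One quick check before digging through the forums is whether the tools that configure wants are on the PATH at all. Here's a minimal sketch, assuming a POSIX shell. The `check_tools` helper is a name I made up for illustration, and the tool names are simply the two compilers installed above plus the `al` tool named in the error. It only tells me what's missing, not which package provides it.

```shell
#!/bin/sh
# check_tools is a hypothetical helper, not part of Mono or Ubuntu.
# It reports whether each named tool can be found on the PATH and
# returns the number of tools that were not found.
check_tools() {
    missing=0
    for tool in "$@"; do
        if location=$(command -v "$tool" 2>/dev/null); then
            echo "found:   $tool -> $location"
        else
            echo "missing: $tool"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}

# The compilers installed earlier, plus the tool configure complained about.
check_tools mcs gmcs al || echo "at least one tool is missing"
```

If `al` shows up as missing here, then the configure error is at least accurate and the remaining question is which package supplies it.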

I'm not trying to single out the Mono project here. If you've ever tried to install a Unix application or library, you've probably ended up in dependency hell: having to install a series of other packages that in turn require some other dependency to be installed.

So, to the point of this post: There's a lot of talk about why Linux, which is free, isn't more widely adopted on the desktop. Ubuntu is a great product -- the UI is intuitive, system administration isn't any worse than Windows, and all the productivity tools you need are available.

In my opinion, one reason is ./configure and make. If the open source community wants more developers creating the innovative applications that will attract a wider user base, these have to go. I'm sure that experiences like the one I've described here have turned away many developers.

Microsoft has its problems, but it has the distinct advantage of being able to provide a complete set of proprietary software along with excellent development tools (Visual Studio with ReSharper is hard to beat). Install them, and you're creating and deploying applications almost immediately.

The first step to improving Linux adoption has to be making it easier for developers to simply get started.