Any software controlled device that is attached to a human presents unique and potentially life threatening risks. A recent article on the use of static analysis for medical device software prompted Pascal Cuoq at Frama-C to share his thoughts on the subject. This is part 1 of 3.

The article Diagnosing Medical Device Software Defects Using Static Analysis gives an interesting overview of the applicability of static analysis to embedded medical software. I have some experience in the field of formal methods (including static analysis of programs), and absolutely none at all in the medical domain. I can see how it would be desirable to treat software involved at any stage of a medical procedure as critical, and coincidentally, producing tools for managing critical software has been my main occupation for the last five years. This blog post constitute the first part of what I have to say on the subject, and I hope someone finds it useful.

As the article states, in the development of medical software, as in many other embedded applications, C and C++ are used predominantly, for better or for worse. The "worse" part is an extensive list of subtle and less subtle pitfalls that seem to lay in each of these two languages' corner.

The most obvious perils can be avoided by restricting the programmer to a safer subset of the language -- especially if it is possible to recognize syntactically when a program has been written entirely in the desired subset. MISRA C, for instance, defines a set of rules, most of them syntactic, that help avoid the obvious mistakes in C. But only a minority of C's pitfalls can be eliminated so easily. A good sign that coding style standards are no silver bullet is that there exist so many. Any fool can invent theirs, and some have. The returns of mandating more and more coding rules diminish rapidly, to the point that overdone recommendations found in the wild contradict each other, or in the worst case, contradict common sense.

Even written according to a reasonable development standard, a program may contain bugs susceptible to result in run-time errors. Worse, such a bug may, in some executions, fail to produce any noticeable change, and in other executions crash the software. This lack of reproducibility means that a test may fail to reveal the problem, even if the problematic input vector is used.

A C program may yet hide other dangerous behaviors. The ISO 9899:1999 C standard, the bible for C compilers implementers and C analyzers implementers alike, distinguishes "undefined", "unspecified", and "implementation-defined" behaviors. Undefined behaviors correspond roughly to the run-time errors mentioned above. The program may do anything if one of these occurs, because it is not defined by the standard what it should do. A single undefined construct may cause the rest of the program to behave erratically in apparently unrelated ways. Proverbially, a standard-compliant compiler may generate a program that causes the device to catch fire when a division by zero happens.

Implementation-defined behaviors represent choices that are not imposed by the standard, but that have to be made by the compiler once and for all. In embedded software, it is not a viable goal to avoid implementation-defined constructions: the software needs to use them to interface with the hardware. Additionally, size and speed constraints for embedded code often force the developer to use implementation-defined constructs even where standard constructs exist to do the same thing.

However, in the development of critical systems, the underlying architecture and compiler are known before software development starts. Some static analysis techniques lend themselves well to this kind of parameterization, and many available tools that provide advanced static analysis can be configured for the commonly available embedded processors and compilers. Provided that the tests are made with the same compiler and hardware as the final device, the existence of implementation-defined behaviors does not invalidate testing as a quality assurance method, either.

Unspecified behaviors are not treated as seriously as they should by many static analysis tools. That's because unlike undefined behaviors, they cannot set the device on fire. Still, they can cause different results from one compilation to the other, from one execution to the other, or even, when they occur inside a loop, from one iteration to the other. Like the trickiest of run-time errors, they lessen the value of tests because they are not guaranteed to be reproducible.

The "uninitialized variable" example in the list of undesirable behaviors in the article is in fact an example of unspecified behavior. In the following program, the local variable L has a value, it is only unknown which one.

|

|

int main() { int L; return (L-L); } |

Computing (L-L) in this example reliably give a result of zero.

Note: For the sake of brevity, people who work in static analysis have a tendency to reduce their examples to the shortest piece of code that exhibits the problem. In fact, in writing this blog post I realized I could write an entire other blog post on the deformation of language in practitioners of static analysis. Coming back to the subject at hand, of course, no programmer wants to compute zero by subtracting an uninitialized variable from itself. But a cryptographic random generator might for instance initialize its seed variable by mixing external random data with the uninitialized value, getting at least as much entropy as provided by the external source but perhaps more. The (L-L) example should be considered as representing this example and all other useful uses of uninitialized values.

Knowledge of the compilation process and lower-level considerations may be necessary in order to reliably predict what happens when uninitialized variables are used. If the local variable L was declared of type float, the actual bit sequence found in it at run-time could happen to represent IEEE 754's NaN or one of the infinities, in which case the result of (L-L) would be NaN.

Uninitialized variables, and more generally unspecified behaviors, are indeed less harmful than undefined behaviors. Some "good" uses for them are encountered from time to time. We argue that critical software should not exhibit any unspecified behavior at all. Uses of uninitialized variables can be excluded by a simple syntactic rule "all local variables should be initialized at declaration", or, if material constraints on the embedded code mean this price is too high to pay, with one of the numerous static analyzers that reliably detect any use of an uninitialized variable. Note that because of C's predominant use of pointers, it may be harder than it superficially appears to determine if a variable is actually used before being initialized or not; and this is even in ordinary programs.

There are other examples of unspecified behaviors not listed in the article, such as the comparison of addresses that are not inside a same aggregate object, or the comparison of an invalid address to NULL. I am in fact still omitting details here. See the carefully worded §6.5.8 in the standard for the actual conditions.

An example of the latter unspecified behavior is (p == NULL) where p contains an invalid address computed as t+12345678 (t being a char array with only 10000000 cells). This comparison may produce 1 when t happens to have been located at a specific address by the compiler, typically UINT_MAX-12345677. It also produces 0 in all other cases. If there is an erroneous behavior that manifests itself only when this condition produces 1, a battery of tests is very unlikely to uncover the bug, which may remain hidden until after the device has been widely deployed.

An example of comparison of addresses that are not in the same aggregate object is the comparison (p <= q), when p and q are pointers to memory blocks that have both been obtained by separate calls to the allocation function malloc. Again, the result of the comparison depends on uncontrolled factors. Assume such a condition made its way by accident in a critical function. The function may have been unit-tested exhaustively, but the unit tests may not have taken into account the previous sequence of bloc allocations and deallocations that results in one block being positioned before or after the other in the heap. A typical static analysis tool is smarter, and may consider both possibilities for the result of the condition, but we argue that in critical software, the fact that the result is unspecified should in itself be reported as an error.

Another failure mode for programs written in C or any other algorithmic language is the infinite loop. In embedded software, one is usually interested in an even stronger property than the absence of infinite loops, the verification of a predetermined bound on the execution time of a task. Detection of infinite loops is a famous example of undecidable problem. Undecidable problems are problems for which it is mathematically impossible to provide an algorithm that for any input (here, a program to analyze) eventually answers "yes" or "no". People moderately familiar with undecidability sometimes assume this means it is impossible to make a static analyzer that provides useful information on the termination of an analyzed program, but the theoretical limitation can be worked around by accepting a little imprecision (false negatives, or false positives, or both, in the diagnostic), or by allowing that the analyzer itself will, in some cases, not terminate.

The same people who recognize termination of the analyzed program as an undecidable property for which theory states that a perfect analyzer cannot be made, usually fail to recognize that finely recognizing run-time errors or unspecified behaviors are undecidable problems as well. For these questions, it is also mathematically impossible to build an analyzer that always terminates and emits neither false positives nor false negatives.

Computing the worse-case execution time is at least as hard as verifying termination, therefore it's undecidable too. That's for theory. In practice, there exist useful static analyzers that provide guaranteed worse case execution times for the execution of a piece of software. They achieve this by limiting the scope of the analysis, firstly, to the style of code that is common in embedded software, and secondly, to the one sub-task whose timing is important. This kind of analysis cannot be achieved using the source code alone. The existing analyzers all use the binary code of the task at some point, possibly in addition to the source code, a sample of the processor to be used in the device, or only an abstract description of the processor.

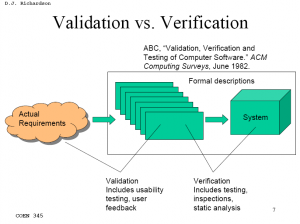

This was part one of the article, where I tried to provide a list of issues to look for in embedded software. In part two, I plan to talk about methodology. In part three, I will introduce formal specifications, and show what they can contribute to the issue of software verification.

The Technology Review article Better Brain-Wave Analysis looks at start-up ElMindA that is trying to find new quantitative methods for broadening the clinical use of EEG.

The Technology Review article Better Brain-Wave Analysis looks at start-up ElMindA that is trying to find new quantitative methods for broadening the clinical use of EEG.

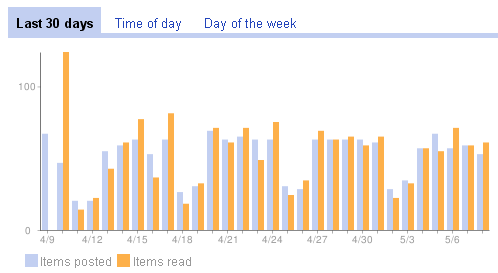

You can see I'm pretty consistent. That's 1605 posts in 30 days, or about 53 posts per day. To some, this may seem like a lot. To others, I'm a wimp. During the week I usually read them over lunch or in the evening.

You can see I'm pretty consistent. That's 1605 posts in 30 days, or about 53 posts per day. To some, this may seem like a lot. To others, I'm a wimp. During the week I usually read them over lunch or in the evening.

It's not easy getting your arms around this one. The term

It's not easy getting your arms around this one. The term