The reverse psychology I used in Failure to Launch: Healthcare IT Q&A is finally starting to work. The question definitions are complete and the commitment phase has begun:

Go over and sign up today.

Here's an interesting analysis of security threats within a Windows-based hospital network for embedded medical devices: A threat analysis of critical patient monitoring medical devices.

The threat models are fairly complex and clearly a product of wider enterprise network IT security needs. I've discussed some of the other issues of putting medical devices on an institutional network in Networked Medical Devices. Security threats were not covered and this is an important topic for every hospital network.

There are a couple of items in this article worth commenting on.

The top five unmitigated threats were found to be:

The corrective action for the top threat (T002) was (my highlight):

After it was decided to remove all ePHI from the medical device data storage, the risk assessment changed and the threat of the medical device infecting the hospital enterprise network (T017) then became our primary concern.

This may be the "most effective countermeasure possible for HIPAA compliance and protecting patient privacy", but it is not a practical solution in the real world. Many medical devices store patient demographics. Because the benefits of patient identification outweigh the security risks, this practice is not likely to change in the future.

On these questions:

I generally agree with the conclusions for the device under analysis. The challenge for a hospital is how do you ensure that every networked medical device follows these best practices (communications integrity, hardened OS, clean distribution media, etc.)?

Software development is software development. Most of the life cycle and quality issues faced in medical software are the same challenges for any software product. Technical Debt in Medical Software points out what technical debt is:

A Martin Fowler article is referenced that nicely identifies the source of technical debt:

The benefits of paying down the debt are:

Of course if you don't want to pay it off, there's always the option to go bankrupt. This may have long-term advantages, but it will surely be a more expensive route. There is one statement in this regard that I think needs some qualification:

In this case the technical debt can be retired along with the legacy system, and like filing Chapter 11, you are no longer responsible to address all the sins of the past.

I know this refers to code sins, but just because you decide to do a re-write doesn't mean you no longer have responsibility for the legacy product. You still have customers using the old software that you're obligated to continue to support. For FDA approved medical software, this is a legal requirement. Most of the time this means that the legacy code will need to be maintained and periodically updated in the field, sometimes even after the "new" product is released. This just makes the cost of bankruptcy even higher.

It seems like a great concept (to me anyway). Grow a community of like-minded Healthcare IT geeks that want to participate in an on-line Q&A site which rewards contribution and facilitates constructive dialog. As of today, it appears unlikely this will happen anytime soon.

Even after being endorsed on HIStalk News 6/25/10, fewer than 900 people have visited the site.

The attraction that programmers have for Stack Overflow just doesn't translate for this group of professionals. I suppose it's the nature of the business.

Anyway, it's really too bad there isn't a way for a site like this to gain traction. It would be a valuable HIT resource if it could get off the ground.

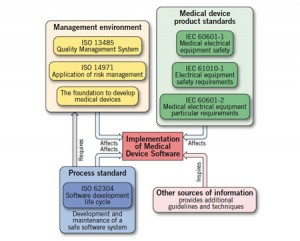

The FDA approved ISO 62304 as a recognized software development standard in 2009. Developing Medical Device Software to ISO 62304 gives a nice overview.

Besides providing a globally accepted development process, the standard has another practical component, the assignment of a safety class to individual software items and units:

Each classification changes the required documentation for the assigned software.

These standards will become more widely known as the FDA moves to regulate the proliferation of medical applications for personal and home use, most notably software that runs on mobile devices. I've discussed this before in When Cell Phones Become Medical Devices. As noted more recently in FDA oversight may extend throughout health IT:

... an FDA director stated flatly: "Under the Federal Food, Drug and Cosmetic Act, HIT software is a medical device."

Broad FDA oversight at the QSR/62304 level will probably not happen, but change is certainly coming for many HIT companies.

The Elsmar Cove Forum IEC 62304 - Medical Device Software Life Cycle Processes has a lot of discussion on this topic. This is where I found a document checklist that is useful for understanding the process scope:

IEC62304_Checklist.xls (Excel spreadsheet)

UPDATE (9/9/10): IEC 62304 – The Basics

Andrew Dallas's article Caution: V&V May Be Hazardous to Software Quality touches on a number of good points regarding software quality best practices.

Medical device software development V&V (also see here) and the documentation that goes with it have substantial costs. Any strategy that can reduce this overhead and still meet the necessary quality standards should be seriously considered.

The use of "incremental" software development approaches really refers to Agile methodologies. I've talked about the use of Agile for medical device software development several times:

Most of the discussion revolves around the risks associated with this approach. The benefits of any process change have to be weighed against the possible risks that might be introduced.

Besides the importance of understanding what V&V documentation the FDA actually wants to see, Andrew makes a great point about producing quality software versus the V&V process (my highlight):

V&V is not software testing. Verification testing ensures specified requirements have been fulfilled. Validation testing ensures that particular requirements for a specific intended use can be consistently fulfilled.

Following the required FDA V&V processes alone is not sufficient to ensure software quality. You also have to adhere to software development best practices at all levels. For example, in addition to non-functional requirements there are many software quality factors that require careful design considerations and testing that you may decide are outside the scope of FDA reporting. Deciding what to report and what to leave out is the balancing act.

There was a statement in one of the HIStalk Readers Write 5/10/10 articles that I haven't been able to get out of my mind. In Digging for Gold in your HIT Applications, Ron Olsen writes:

One of the most over-used buzz words in healthcare IT is “interoperability,” which is really a big word that self-important people use to describe data transfer.

OMG, I've been using that word for a long time...

All joking aside, for the most part Mr. Olsen's advice to get more out of existing IT tools is a reasonable suggestion. Unfortunately, interoperability means a lot more than just "data transfer" (see Healthcare IT Interoperability Defined), and that is where the advice breaks down.

Scripting tools can manipulate those files, turning them into almost any format imaginable. With the correct format, data can be transferred to disparate systems, individually or concurrently, via a data stream.

The fundamental flaw in this statement is the oversimplification (sorry, another big word) of the problem. Simple scripts are good for simple tasks. Communicating medical data reliably and securely between disparate systems is not a simple task.

I would also encourage all HIT professionals to fully understand the tools at their disposal in order to improve the efficiency and effectiveness of their organizations. There may be a few nuggets, but I'm not so sure that there will be a whole lot of gold to be found when it comes to interoperability.

Yours truly was interviewed for this article:

To Validate and Verify: Software Issues Solved

"V&V" is one of those topics that should be simple to understand, but for some reason is the source of a lot of confusion. This is evident in the comments on Software Verification vs. Validation.

It is also interesting to note that the differing interpretations of these definitions result in a wide variety of V&V strategies and plans. From a regulatory point of view there is no single right or wrong way to do it. It's similar to the implementation of quality systems in general: if you say you are going to do something, you need to be able to prove that you're actually doing it.

The IntendiX (by g.tec) is a BCI device that uses visual evoked potentials to "type" messages on a keyboard.

The system is based on visually evoked EEG potentials (VEP/P300) and enables the user to sequentially select characters from a keyboard-like matrix on the screen just by paying attention to the target for several seconds.

P300 refers to the averaged event-related potential deflection that occurs between 300 and 600 ms after a stimulus. This is a BCI research platform that has been made into a commercial reality. The system includes useful real-life features:

Besides writing a text the patient can also use the system to trigger an alarm, let the computer speak the written text, print out or copy the text into an e-mail or to send commands to external devices.

I'm usually skeptical of "mind reading" device claims (e.g. here), but P300-based technology has many years of solid research behind it. It may be pricey ($12,250) and typing 5 to 10 characters per minute may not sound very exciting, but this device would be a huge leap for disabled patients that have the cognitive ability but no other way of communicating.

(hat tip: medGadget)

UPDATE (3/24/10): Mind Speller Lets Users Communicate with Thought

Pascal Cuoq at Frama-C continues his discussion of static analysis for medical device software. Also see Part 1 and Part 2.

In May 2009, I alluded to a three-part blog post on the general topic of static analysis in medical device software. The ideas I hope will emerge from this third and last part are:

Going back to point one, a "functional specification" is a description of what a system/subsystem does. I really mostly intend to talk about formal versions of functional specifications. This only means that the description is written in a machine-parsable language with an unambiguous grammar. The separation between formal and informal specifications is not always clear-cut, but this third blog entry will try to convince you of the advantages of specifications that can be handled mechanically.

Near the bottom of the V development cycle, "subsystem" often means software: a function, or a small tree of functions. A functional specification is a description of what a function (respectively, a tree of functions) does and does not (the time they take to execute, for instance, is usually not considered part of the functional specification, although whether they terminate at all can belong in it. It is only a matter of convention). The Wikipedia page on "Design by Contract" lists the following as making up a function contract, and while the term is loaded (it may evoke Eiffel or run-time assertion checking, which are not specifically the topic here), the three bullet points below are a good categorization of what functional specifications are about:

I am tempted to point out that invariants maintained by a function can be encoded in terms of things that the function expects and things that the function guarantees, but this is exactly the kind of hair-splitting that I am resolved to give up on.

The English sentence "when called, this function may modify the global variables G and H, and no other" is almost unambiguous and rigorous — assuming that we leave aliasing out of the picture for the moment. Note that while technically something that the function ensures on return (it ensures that for any variable other than G or H, the value of the variable after the call is the same as its value before the call), this property can be thought of more intuitively as something that the function maintains.

The enthusiastic specifier may like the sentence "this function may modify the global variables G and H, and no other" so much that he may start copy-pasting the boilerplate part from one function to another. Why should he risk introducing an ambiguity accidentally? Re-writing from memory may lead him to forget the "may" auxiliary, when he does not intend to guarantee that the function will overwrite G and H each time it is called. As with contracts of a more legal nature, copy-pasting is the way to go. The boilerplate may also include jargon that makes it impossible to understand for someone who is not from the field, or even from the company, whence the specifications originate. Ordinary words may be used with a precise domain-specific meaning. These are all reasons not to paraphrase, and to reuse the specification template verbatim.

The hypothetical specifier may particularly appreciate that the specification above is not only carefully worded but also that a list of possibly modified globals is part of any wholesome function specification. He may — rightly, in my humble opinion — endeavor to use it for all the functions he has to specify near the end of the descending branch of the V cycle. This is when he is ripe for the introduction of a formal syntax for functional specifications. According to Wikipedia, Robert Recorde introduced the equal sign "to auoide the tediouſe repetition of [...] woordes", and the sentence above is a tedious repetition begging for a sign of its own to replace it. When the constructs of the formal language are well-chosen, the readability is improved, instead of being diminished.

A natural idea for expressing the properties that make up a function contract is to use the same language as for the implementation. Being a programming language, it is suitably formal; the specifier, even if he is not the programmer, is presumably already familiar with it; and the compiler can transform these properties into executable code that checks that preconditions are properly assured by callers, and that the function does its own part by returning results that satisfy its postconditions. This choice can be recognized in run-time assertion checking, and in Test-Driven Development (in Test-Driven Development, unit tests and expected results are written before the function's implementation and are considered part of the specification).
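As a minimal sketch of run-time assertion checking, the contract of a function can be written in C itself with `assert`. The function `average`, its bounds, and the limit of 1000 samples are hypothetical, chosen only for illustration:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical example: integer average of n samples.
 * Contract, expressed in the implementation language:
 *   precondition:  samples != NULL and 0 < n <= 1000
 *   postcondition: min(samples) <= result <= max(samples)
 */
int average(const int *samples, size_t n)
{
    /* Preconditions: checked on entry, so a faulty caller is caught here. */
    assert(samples != NULL);
    assert(n > 0 && n <= 1000);

    long long sum = 0;
    int lo = samples[0];
    int hi = samples[0];
    for (size_t i = 0; i < n; i++) {
        sum += samples[i];
        if (samples[i] < lo) lo = samples[i];
        if (samples[i] > hi) hi = samples[i];
    }
    int result = (int)(sum / (long long)n);

    /* Postcondition: the function checks its own part before returning. */
    assert(lo <= result && result <= hi);
    return result;
}
```

These checks only fire for the inputs that are actually exercised at run time, which is what distinguishes this approach from static verification of the same properties.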

Still, the choice of the programming language as the specification language has the disadvantages of its advantages: it is a programming language; its constructs are optimized for translation to executable code, with intent of describing algorithms. For instance, the "no global variable other than G and H is modified" idiom, as expressed in C, is a horrible way to specify a C function. Surely even the most rabid TDD proponent would not suggest it for a function that belongs in the same C file as a thousand global variable definitions.
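To see why, here is a hedged sketch of that idiom in plain C for a file with only three other globals. The globals `mode`, `total` and `label` and the function `step` are hypothetical; the point is that every global that must not change needs its own snapshot and its own comparison:

```c
#include <assert.h>
#include <string.h>

int G, H;        /* the only globals the function may modify */
int mode;        /* hypothetical other globals in the same file */
long total;
char label[8];

/* Hypothetical function under specification. */
void step(int x)
{
    G += x;
    if (x > 0)
        H++;     /* H is written on some paths only */
}

/* "No global other than G and H is modified", as executable C:
 * snapshot everything else, call, then compare. */
void checked_step(int x)
{
    int  saved_mode  = mode;
    long saved_total = total;
    char saved_label[sizeof label];
    memcpy(saved_label, label, sizeof label);

    step(x);

    assert(mode == saved_mode);
    assert(total == saved_total);
    assert(memcmp(label, saved_label, sizeof label) == 0);
}
```

With a thousand globals the wrapper grows by a snapshot and an assertion per variable, and it silently goes stale each time a new global is added to the file.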

A dedicated specification language has the freedom to offer exactly the constructs that make it pleasant to write specifications in it. This means directly including constructs for commonly recurring properties, but also providing the building blocks that make it possible to define new constructs for advanced specifications. So a good specification language has much in common with a programming language.

A dedicated specification language may for instance offer

```c
/*@ assigns G, H; */
```

as a synonym for the boilerplate function specification above, and while this syntax may seem wordy and redundant to the seat-of-the-pants programmer, I hope to have convinced you that in the context of structured development, it fares well in the comparison with the alternatives. Functional specifications give static analyzers that understand them something to chew on, instead of having to limit themselves to the absence of run-time errors. This especially applies to correct static analyzers as emphasized in part 2 of this oversize blog post.
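For context, a sketch of how such an annotation might sit on a function. The function `update`, the global `unrelated`, and the `requires` bound on `x` are assumptions added for illustration, and the contract has not been run through Frama-C here. Because ACSL lives in comments, the file remains ordinary C:

```c
#include <assert.h>

int G, H;
int unrelated;   /* hypothetical global that the contract leaves untouched */

/*@ requires -1000 <= x <= 1000;
    assigns G, H;
*/
void update(int x)
{
    G = x;
    if (x > 0)
        H = 2 * x;   /* written on some paths only, hence "may modify" */
}

/* A caller can rely on the assigns clause: `unrelated` keeps its value
 * across the call. The assert is only a run-time echo of what an analyzer
 * could deduce statically from the contract. */
int caller(void)
{
    unrelated = 42;
    update(5);
    assert(unrelated == 42);
    return G + H;
}
```

The clause is one line however many other globals the file defines, which is the contrast with the snapshot-and-compare idiom in C.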

Third parties that contact us are often focused on using static analysis tools to do things they weren't doing before. It is a natural expectation that a new tool will allow you to do something new, but a more accurate description of our activity is that we aim to let people do the same specification and verification that they are already doing (for critical systems), only better. In particular, people who discover tools such as Frama-C/Jessie or other analysis tools based on Hoare-Floyd precondition computations often think these tools are intended for verifying, and can only be useful for verifying, complete specifications.

A complete specification for a function is a specification where all the properties expected for the function's behavior have been expressed formally as a contract. In some cases, there is only one function (in the mathematical sense) that satisfies the complete specification. This does not prevent several implementations from existing for this unique mathematical function. More importantly, it is nice to be able to check that the C function being verified is one of them!

Complete specifications can be long and tedious to write. In the same way that a snippet of code can be shorter than the explanation of what it does and why it works, a complete specification can sometimes be longer than its implementation. And it is often pointed out that these specifications can be so large that once written, it would be too difficult to convince oneself that they do not themselves contain a bug.

But just because we are providing a language that would allow you to write complete specifications does not mean that you have to. It is perfectly fine to write minimal formal specifications accompanied by informal descriptions. To be useful, the tools we are proposing only need to do better than testing (the most widely used verification technique at this level). Informal specifications traditionally used as the basis for tests are not complete either. And there may be bugs either in the informal specification or in its translation into test cases.

If anything, the current informal specifications leave out more details and contain more bugs, because they are not machine-checked in any way. The static analyzer can help find bugs in a specification in the same way that a good compiler's sanity checks and warnings help avoid the stupidest bugs in a program.

In particular, because they are written in a dedicated specification language, formal specifications have better composition properties than, say, C functions. A bug in the specification of one function is usually impossible to overlook when trying to use said specification in the verification of the function's callers. Taking an example from the tutorial/library (authored by our colleagues at the applied research institute Fraunhofer FIRST) ACSL by example, the specification of the max_element function is quite long and a bug in this specification may be hard to detect. The function max_element finds the index of the maximum element in an array of integers. The formal version of this specification is complicated by the fact that it specifies that if there are several maximum elements, the function returns the first one.
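The following is a sketch of what such a contract can look like, written in the spirit of ACSL by example but not copied from it (that document uses its own type names, and loop annotations needed for a proof are omitted here). The last `ensures` clause is the one that pins down the first maximum. The helper `check_max_element` is a hypothetical addition that merely replays the postconditions at run time:

```c
#include <assert.h>

/*@ requires 0 <= n;
    requires \valid_read(a + (0 .. n-1));
    assigns \nothing;

    behavior empty:
      assumes n == 0;
      ensures \result == 0;

    behavior not_empty:
      assumes 0 < n;
      ensures 0 <= \result < n;
      ensures \forall integer i; 0 <= i < n ==> a[i] <= a[\result];
      ensures \forall integer i; 0 <= i < \result ==> a[i] < a[\result];

    complete behaviors;
    disjoint behaviors;
*/
int max_element(const int *a, int n)
{
    if (n == 0)
        return 0;
    int max = 0;
    for (int i = 1; i < n; i++)
        if (a[max] < a[i])   /* strict comparison keeps the first maximum */
            max = i;
    return max;
}

/* Run-time replay of the not_empty postconditions; requires n > 0. */
void check_max_element(const int *a, int n)
{
    int r = max_element(a, n);
    assert(0 <= r && r < n);
    for (int i = 0; i < n; i++)
        assert(a[i] <= a[r]);   /* r indexes a maximum */
    for (int i = 0; i < r; i++)
        assert(a[i] < a[r]);    /* nothing before r is as large */
}
```

Even in this reduced form the contract is several times longer than the loop it describes, which illustrates why a mistake in it could go unnoticed when it is read in isolation.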

Next in the document a function max_seq for returning the value of the maximum element in an array is defined. The implementation is straightforward:

```c
int max_seq(const int* p, int n)
{
  return p[max_element(p, n)];
}
```

The verification of max_seq builds on the specification for max_element. This provides additional confidence: the fact that max_seq was verified successfully makes a bug in the specification of max_element unlikely. Not only that, but if the (simpler, easier to trust) specification for max_seq were the intended high-level property to verify, it wouldn't matter that the low-level specification for max_element was not exactly what the specifier intended (say, if there was an error in the specification of the index returned in case of ties): the complete system still has no choice but to behave as intended in the high-level specification. Unlike a compiler that lets you put together functions with incompatible expectations, the proof system always ensures that the contract used at the call point is the same as the contract that the called function was proved to satisfy.
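The point about ties can be illustrated at run time with a small sketch. The two variants `max_element_first` and `max_element_last` and the wrapper `max_seq_with` are hypothetical names introduced here; this is a test-style illustration under those assumptions, not a proof, and the annotation on `max_seq_with` is written in ACSL notation for readability only:

```c
#include <assert.h>

/* Variant matching the intended low-level specification: first maximum. */
int max_element_first(const int *a, int n)
{
    int max = 0;
    for (int i = 1; i < n; i++)
        if (a[max] < a[i])
            max = i;
    return max;
}

/* Variant that differs only on ties: last maximum. */
int max_element_last(const int *a, int n)
{
    int max = 0;
    for (int i = 1; i < n; i++)
        if (a[max] <= a[i])
            max = i;
    return max;
}

typedef int (*max_element_fn)(const int *, int);

/* High-level property, in ACSL notation:
 *   requires n > 0;
 *   ensures \forall integer i; 0 <= i < n ==> p[i] <= \result;
 *   ensures \exists integer i; 0 <= i < n && p[i] == \result;
 */
int max_seq_with(max_element_fn find, const int *p, int n)
{
    assert(n > 0);
    return p[find(p, n)];
}
```

On an array such as `{4, 7, 1, 7}` the two variants return different indices, yet `max_seq_with` returns 7 with either one: the tie-breaking rule is invisible at the level of the high-level property.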

And this concludes what I have to say on the subject of software verification. The first two parts were rather specific to C, and would only apply to embedded code in medical devices. This last part is more generic — in fact, it is easier to adapt the idea of functional specifications for static verification to high-level languages such as C# or Java than to C. Microsoft is pushing for the adoption of its own proposal in this respect, Code Contracts. Tools are provided for the static verification of these contracts in the premium variants of Visual Studio 2010. And this is a good time to link to this video. Functional specifications are a high-level and versatile tool, and can help with the informational aspects of medical software as well as for the embedded side of things. I would like to thank again my host Robert Nadler, my colleague Virgile Prevosto and department supervisor Fabrice Derepas for their remarks, and twitter user rgrig for the video link.