Last week I attended the WLSA/Continua Mobile Healthcare Symposium and the opening day of the Continua Health Alliance Winter Summit 2010. A couple of weeks before that, I also watched a few sessions of the FDA Workshop on Medical Device Interoperability: Achieving Safety and Effectiveness via Webcast*.

Since I'm not going to HIMSS in Atlanta this year (it starts Mar. 1), I thought now would be a good time to do some venting.

I've talked about HIT problems before, e.g., Healthcare Un-Interoperability and The EMR-Medical Devices Mess. With all of the ARRA/HITECH talk and the national Healthcare debate raging, I started to wonder how the issues facing device interoperability, wireless Healthcare, and HIT in general really fit into the bigger picture.

After sitting through multiple sessions on a wide variety of topics presented by smart people, the obvious hit me in the face: the complexity of the issues is mind-numbing. Everybody has good (and even great) ideas, but nobody has real solutions. Why hasn't all this good HIT translated into meaningful improvements in Healthcare?

For example, at first I thought the talk by Dr. Patrick Soon-Shiong might be heading somewhere interesting. He presented a well-structured view of the current Healthcare landscape that seemed to make a lot of sense. Then he plunged into the abyss with an in-depth discussion of transformational technologies (molecular data mining, Visual Evoked Potentials, etc.). These developments could eventually lead to improvements in people's health, but we never got to hear how any of the complex Healthcare delivery issues were going to be addressed.

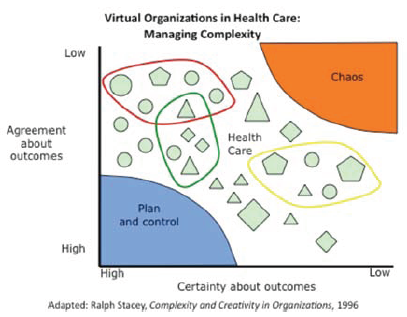

Among his many endeavors, Dr. Soon-Shiong is Chairman of the National Coalition for Health Integration (NCHI). I think the NCHI "Zone of Complexity" point of view (see here, warning: PDF) is a good starting point for understanding the position Healthcare IT is in.

Also, following the diagram above is this statement:

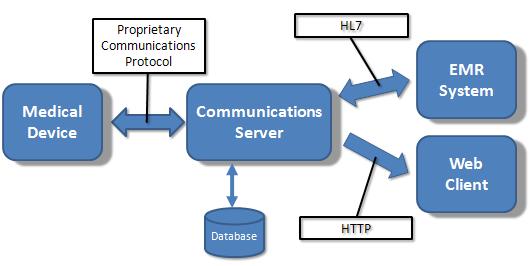

> However, currently, even when information is in digital formats, data are not accessible because they reside in different “silos” within and between organizations. In turn, the U.S. health system is hampered by inefficient virtual organizations that lack the mechanisms needed to engage in coordinated action.

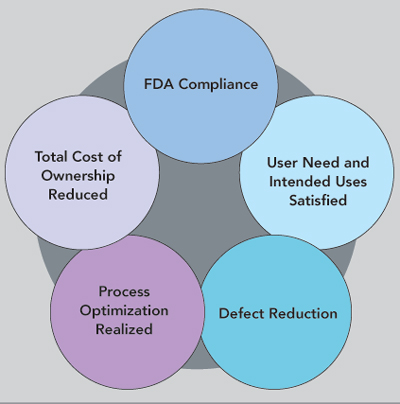

The NCHI Integrated Health Platform (grid computing) is a good idea, but does it even begin to solve these complex problems?

- They are taking a "bottom-up" approach to interoperability (system, data, and process) and trying to leverage existing technologies (like DICOM and HL7). Makes sense. But other than academic and government institutions, what's the incentive for private companies (like EMR vendors) to participate?

- How is an improved underlying infrastructure going to reduce the chaotic nature of the health delivery system (hospitals, insurance companies, Medicare, etc.)? It's like putting the cart before the horse.

This is the dilemma. We can come up with clever and even ingenious technical solutions in our little IT world, but none of them are going to be game changers. The availability of great technology is not enough to change the institutional processes that make an organization inefficient or its communication ineffective.

The solution is in the people and the processes they follow. The best example I can think of is EMR adoption. Everybody knows why the rate of conversion from paper to paperless offices is so low. It's mostly because of people's resistance to changing the way they've "always done it." Change is hard, and in this case HIT is the barrier to adoption, no matter how good the EMR solution is.

At the national level, Healthcare IT only enables interoperability and improved data management. The chaos can only be solved by first changing U.S. Healthcare delivery policies. Whatever those changes are, they will determine the incentives and processes that actually drive the system and put HIT to use.

The NCHI is just one example. There are plenty of other technology-driven Healthcare IT initiatives with equally high hopes. I'm not saying we should stop developing great technologies. We just shouldn't be surprised when they don't change the world.

Happy Presidents Day!

UPDATE (8/4/10): Martin Fowler's UtilityVsStrategicDichotomy post offers another perspective on "IT Doesn't Matter".

*I thought the Webcast was very well done. It had a split screen (speaker and slides) along with multiple camera views, including views of the audience. The video quality wasn't great (it didn't need to be), but the streaming was reliable. Web participants could also chat among themselves and with the on-site staff, and ask the speaker questions.

The issues raised in Tim's post