Pascal Cuoq at Frama-C continues his discussion of static analysis for medical device software. Also see Part 1 and Part 2.

In May 2009, I alluded to a three-part blog post on the general topic of static analysis in medical device software. The ideas I hope will emerge from this third and last part are:

- Formal specifications are good,

- Partial formal specifications are underrated, and

- One should never commit in advance to writing anything, however easy it seems it will be at the time.

Going back to point one, a "functional specification" is a description of what a system/subsystem does. I really mostly intend to talk about formal versions of functional specifications. This only means that the description is written in a machine-parsable language with an unambiguous grammar. The separation between formal and informal specifications is not always clear-cut, but this third blog entry will try to convince you of the advantages of specifications that can be handled mechanically.

Near the bottom of the V development cycle, "subsystem" often means software: a function, or a small tree of functions. A functional specification is a description of what a function (respectively, a tree of functions) does and does not (the time they take to execute, for instance, is usually not considered part of the functional specification, although whether they terminate at all can belong in it. It is only a matter of convention). The Wikipedia page on "Design by Contract" lists the following as making up a function contract, and while the term is loaded (it may evoke Eiffel or run-time assertion checking, which are not specifically the topic here), the three bullet points below are a good categorization of what functional specifications are about:

- What does the function expect, what rules should the caller obey at the time of calling it?

- What does the function guarantee, what is the caller allowed to expect from the function's results?

- What properties does the function maintain?

I am tempted to point out that invariants maintained by a function can be encoded in terms of things that the function expects and things that the function guarantees, but this is exactly the kind of hair-splitting that I am resolved to give up on.

The English sentence "when called, this function may modify the global variables G and H, and no other" is almost unambiguous and rigorous — assuming that we leave aliasing out of the picture for the moment. Note that while technically something that the function ensures on return (it ensures that for any variable other than G or H, the value of the variable after the call is the same as its value before the call), this property can be thought of more intuitively as something that the function maintains.

The enthusiastic specifier may like the sentence "this function may modify the global variables G and H, and no other" so much that he may start copy-pasting the boilerplate part from one function to another. Why should he take the risk to introduce an ambiguity accidentally? Re-writing from memory may lead him to forget the "may" auxiliary, when he does not intend to guarantee that the function will overwrite G and H each time it is called. Like for contracts of a more legal nature, copy-pasting is the way to go. The boilerplate may also include jargon that make it impossible to understand by someone who is not from the field, or even from the company, whence the specifications originate. Ordinary words may be used with a precise domain-specific meaning. All reasons not to try to paraphrase and to reuse the specification template verbatim.

The hypothetical specifier may particularly appreciate that the specification above is not only carefully worded but also that a list of possibly modified globals is part of any wholesome function specification. He may — rightly, in my humble opinion — endeavor to use it for all the functions he has to specify near the end of the descending branch of the V cycle. This is when he is ripe for the introduction of a formal syntax for functional specifications. According to Wikipedia, Robert Recorde introduced the equal sign "to auoide the tediouſe repetition of [...] woordes", and the sentence above is a tedious repetition begging for a sign of its own to replace it. When the constructs of the formal language are well-chosen, the readability is improved, instead of being diminished.

A natural idea for to express the properties that make up a function contract is to use the same language as for the implementation. Being a programming language, it is suitably formal; the specifier, even if he is not the programmer, is presumably already familiar with it; and the compiler can transform these properties into executable code that checks that preconditions are properly assured by callers, and that the function does its own part by returning results that satisfy its postconditions. This choice can be recognized in run-time assertion checking, and in Test-Driven Development (in Test-Driven Development, unit tests and expected results are written before the function's implementation and are considered part of the specification).

Still, the choice of the programming language as the specification language has the disadvantages of its advantages: it is a programming language; its constructs are optimized for translation to executable code, with intent of describing algorithms. For instance, the "no global variable other than G and H is modified" idiom, as expressed in C, is a horrible way to specify a C function. Surely even the most rabid TDD proponent would not suggest it for a function that belongs in the same C file as a thousand global variable definitions.

A dedicated specification language has the freedom to offer exactly the constructs that make it pleasant to write specifications in it. This means directly including constructs for commonly recurring properties, but also providing the building blocks that make it possible to define new constructs for advanced specifications. So a good specification language has much in common with a programming language.

A dedicated specification language may for instance offer

|

1 |

/*@ assigns G, H */ |

as a synonym for the boilerplate function specification above, and while this syntax may seem wordy and redundant to the seat-of-the-pants programmer, I hope to have convinced you that in the context of structured development, it fares well in the comparison with the alternatives. Functional specifications give static analyzers that understand them something to chew on, instead of having to limit themselves to the absence of run-time errors. This especially applies to correct static analyzers as emphasized in part 2 of this oversize blog post.

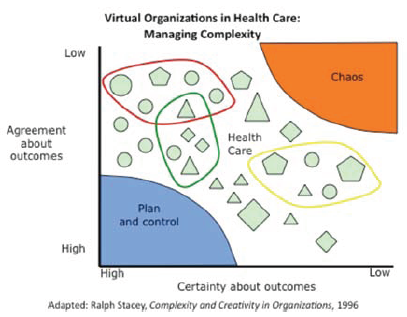

Third parties that contact us often are focused on using static analysis tools to do things they weren't doing before. It is a natural expectation that a new tool will allow you to do something new, but a more accurate description of our activity is that we aim to allow to do the same specification and verification that people are already doing (for critical systems), better. In particular, people who discover tools such as Frama-C/Jessie or other analysis tools based on Hoare-Floyd precondition computations often think these tools are intended for verifying, and can only be useful for verifying, complete specifications.

A complete specification for a function is a specification where all the properties expected for the function's behavior have been expressed formally as a contract. In some cases, there is only one function (in the mathematical sense) that satisfies the complete specification. This does not prevent several implementations to exist for this unique mathematical function. More importantly, it is nice to be able to check that the C function being verified is one of them!

Complete specifications can be long and tedious to write. In the same way that a snippet of code can be shorter than the explanation of what it does and why it works, a complete specification can sometimes be longer than its implementation. And it is often pointed out that these specifications can be so large that once written, it would be too difficult to convince oneself that they do not themselves contain a bug.

But just because we are providing a language that would allow you to write complete specifications does not mean that you have to. It is perfectly fine to write minimal formal specifications accompanied with informal descriptions. To be useful, the tools we are proposing only need to do better than testing (the most widely used verification technique at this level). Informal specifications traditionally used as the basis for tests are not complete either. And there may be bugs in both the informal specification or in its translation into test cases.

If anything, the current informal specifications leave out more details and contain more bugs, because they are not machine-checked in any way. The static analyzer can help find bugs in a specification in the same way that a good compiler's sanity checks and warnings help avoid the stupidest bugs in a program.

In particular, because they are written in a dedicated specification language, formal specifications have better composition properties than, say, C functions. A bug in the specification of one function is usually impossible to overlook when trying to use said specification in the verification of the function's callers. Taking an example from the tutorial/library (authored by our colleagues at the applied research institute Fraunhofer FIRST) ACSL by example, the specification of the max_element function is quite long and a bug in this specification may be hard to detect. The function max_element finds the index of the maximum element in an array of integers. The formal version of this specification is complicated by the fact that it specifies that if there are several maximum elements, the function returns the first one.

Next in the document a function max_seq for returning the value of the maximum element in an array is defined. The implementation is straightforward:

|

1 2 3 4 |

int max_seq(const int* p, int n) { return p[max_element(p, n)]; } |

The verification of max_seq builds on the specification for max_element. This provides additional confidence: the fact that max_seq was verified successfully makes a bug in the specification of max_element unlikely. Not only that, but if the (simpler, easier to trust) specification for max_seq were the intended high-level property to verify, it wouldn't matter that the low-level specification for max_element was not exactly what the specifier intended (say, if there was an error in the specification of the index returned in case of ties): the complete system still has no choice but to behave as intended in the high-level specification. Unlike a compiler that lets you put together functions with incompatible expectations, the proof system always ensures that the contract used at the call point is the same as the contract that the called function was proved to satisfy.

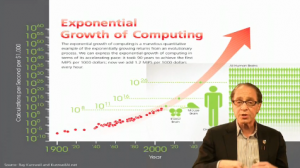

And this concludes what I have to say on the subject of software verification. The first two parts were rather specific to C, and would only apply to embedded code in medical devices. This last part is more generic — in fact, it is easier to adapt the idea of functional specifications for static verification to high-level languages such as C# or Java than to C. Microsoft is pushing for the adoption of its own proposal in this respect, Code Contracts. Tools are provided for the static verification of these contracts in the premium variants of Visual Studio 2010. And this is a good time to link to this video. Functional specifications are a high-level and versatile tool, and can help with the informational aspects of medical software as well as for the embedded side of things. I would like to thank again my host Robert Nadler, my colleague Virgile Prevosto and department supervisor Fabrice Derepas for their remarks, and twitter user rgrig for the video link.

When we moved from Madison to San Diego in 2005, that was a big change. Of course in Jan/Feb the 70 deg temperature difference makes that decision seem pretty smart. When our 12 y/o golden retriever Miles passed away this past Oct. that change really sucked.

When we moved from Madison to San Diego in 2005, that was a big change. Of course in Jan/Feb the 70 deg temperature difference makes that decision seem pretty smart. When our 12 y/o golden retriever Miles passed away this past Oct. that change really sucked.